Should we blame technology for the growth in healthcare spending? Austin Frakt, a healthcare economist who writes for the New York Times, thinks so. Citing several studies from recent years, he claims that technology could account for up to two-thirds of per capita healthcare spending growth.

In this piece, Frakt contrasts the contribution of technology to that of the ageing of the population. Frakt notes that age per se is a poor marker of costs associated with healthcare utilization. What’s important is the amount of money spent near death. If you’re 80 years old and healthy, your usage of healthcare services won’t be much more than that of a 40-year-old person.

So far, so good. But should we accept the proposition that technology is the culprit for healthcare spending growth? Says Frakt:

Every year you age, health care technology changes — usually for the better, but always at higher cost. Technology change is responsible for at least one-third and as much as two-thirds of per capita health care spending growth.

Frakt’s position is common among mainstream economists who come to their conclusions through the application of complex mathematical models of the economy. The studies Frakt cites all use statistical analysis to try to disentangle the relationships among a number of interacting cost factors (e.g., demographics, GDP growth, income growth, and insurance growth) before drawing conclusions about the relative contribution of each of these factors.

The models, however, necessitate making assumptions that may not hold true. Moreover, technology spending is generally not measured directly. Instead, the models first explain spending on the basis of other measurable factors (e.g., demographics), and then attribute to technology the share of spending that remains “unexplained.”
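The residual logic described above can be sketched in a few lines of Python. This is only an illustration: the function name `residual_share` and every number below are hypothetical and are not taken from the studies Frakt cites. The point is simply that whatever the measured factors fail to explain ends up labeled "technology."

```python
# Hypothetical illustration of the "residual" approach. None of these
# numbers come from the studies Frakt cites.

def residual_share(total_growth, explained):
    """Return the share of total growth left over after subtracting
    the contributions of the directly measured factors."""
    residual = total_growth - sum(explained.values())
    return residual / total_growth

# Made-up annual per capita spending growth, in percentage points.
total_growth = 4.0
explained = {
    "demographics": 0.4,
    "income_growth": 1.0,
    "insurance_growth": 0.6,
}

technology_share = residual_share(total_growth, explained)
print(f"Share attributed to technology: {technology_share:.0%}")

# If a factor is left out of the model (here, insurance growth), its
# contribution is silently absorbed into the "technology" residual.
without_insurance = {k: v for k, v in explained.items() if k != "insurance_growth"}
inflated_share = residual_share(total_growth, without_insurance)
print(f"Share attributed to technology without insurance: {inflated_share:.0%}")
```

In this made-up case, dropping a single measured factor raises the share assigned to technology, which is why the choice of which factors to measure, and how well, matters so much to the conclusion.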

But if we resist the seduction of quantitative models and, instead, apply common sense reasoning, it becomes apparent that the conclusion that technology per se drives the crisis of out-of-control spending growth is manifestly untenable.

To see this, it is helpful to imagine a simpler context where healthcare spending is decided voluntarily by patients and their families.

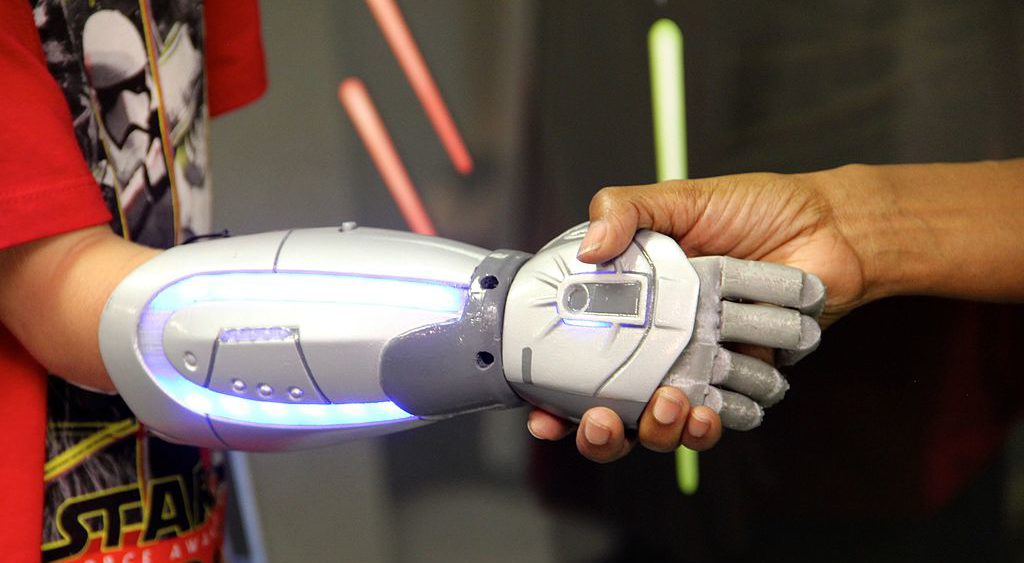

In such a context, a company may speculate that a particular technology (say, one that produces artificial limbs), could serve a certain need. The company then makes an entrepreneurial decision to develop, manufacture and sell artificial limbs on the basis of an estimate of the willingness of patients to pay for the limbs at a price sufficiently high to cover the costs of production and allow for some profit.

The technology company obviously takes a risk. It may err in its estimation of how patients will value its product: If the asking price is above the one patients are willing to pay, it will incur a loss and may go out of business. On the other hand, if the asking price is below the level at which patients value artificial limbs, the company will succeed and make a profit.

What is certain, however, is this: if the company succeeds and patients are willing to pay for the product, healthcare spending will increase, but that will not be viewed as a problem. If patients voluntarily pay for artificial limbs—or for bionic hearts, xeno-transplanted pancreata, or miracle longevity pills—it is because they value the technology more than the money they have parted with, or else they would keep the money. Overall welfare is increased, and there is no reason to blame technology.

Admittedly, some patients may later regret their purchase. But such a regret does not in itself indicate that technology is at fault for the increased spending. It simply means that those patients miscalculated the value they personally derived from the technology.

This potential for miscalculation is something many mainstream healthcare economists frown upon. In 1963, Nobel Prize-winning economist Kenneth Arrow gave fresh impetus to the field of healthcare economic theory in a seminal paper calling attention to this potential for miscalculation and attributing it to “product uncertainty”: because of sickness, and because of the complexity of medical care and technology, patients are unable to make proper value decisions. They can miscalculate in two ways.

First, producers and service providers may take advantage of the situation and obtain a higher price than would otherwise be established under normal “competitive” market mechanisms. Arrow (and many economists following him) therefore recommend various government regulations to mitigate the effect of this “information asymmetry.” (I have previously shown that the standard assumptions put forth by Arrow and others regarding the effects of information asymmetry in medical care are refuted by historical evidence.)

Second, patients may miscalculate in the other direction and forgo technology that could potentially be beneficial to them. Healthcare economists also find this possibility intolerable and invariably favor government intervention to promote or finance health insurance so as to avoid self-rationing by patients.

The problem with these interventions, apart from their inherent paternalism, is that they do nothing to “bridge” the maligned information gap that can lead patients to miscalculate value. In fact, they widen it.

In the first instance, the regulation of technology means that regulators substitute their own values for those of patients. It is regulators who decide what level of evidence and what level of risk is acceptable for a technology to be legalized. In doing so, they deprive patients of even knowing about certain products. They thus make the information gap infinitely large.

In the second instance, the provision of health insurance impairs the ability of patients to make proper value decisions since they no longer bear the full cost (or even any cost) of the technology. Therefore, they are more likely to seek out technology that they might not have purchased at an unhampered market price.

Patients who are shielded from costs tend to over-utilize healthcare technologies, and this drives the price of technology upwards, so long as the insurer is willing to accommodate the demand. In most cases, in fact, insurance companies do end up paying for technology. This goes to show that Frakt and the modeling studies he cites have it exactly backwards: it is increased spending that causes increasingly high technology prices, not the other way around.

Mainstream healthcare economists have long minimized the potential for health insurance to lead to increased spending. In that same 1963 paper, published two years before the enactment of Medicare, Arrow asserted that

The welfare case for insurance policies of all sorts is overwhelming. It follows that the government should undertake insurance in those cases where this market, for whatever reasons, has failed to emerge.

Arrow did consider that health insurance might increase demand for healthcare, but he minimized that possibility and left it to future economists to obtain empirical evidence to determine the extent to which so-called “moral hazard” (the tendency for insurance to increase demand) would affect prices in healthcare. With Arrow’s reassurance, the government embarked on a massive program that has subsidized the demand for not only healthcare technology, but for services and products across the entire healthcare sector.

Because economic analysis is poorly suited for empirical study (since the factors involved change constantly, may not be fully accounted for, and interact with one another), persuasive evidence for the effect of health insurance on spending has taken decades to materialize. Recently, however, Amy Finkelstein, a prominent MIT healthcare economist, was able to analyze a large set of historical data on spending patterns before and after the introduction of Medicare. With regard to the relationship between spending growth and technology, she commented that:

…there is widespread consensus that technological change is the driving force behind the growth in health spending. But this just kicks the can down the road. What then drives technological change in medicine?

…[In my recent study] I find evidence that the introduction of Medicare encouraged the adoption of new medical technologies…Now we find that when large-scale insurance changes lead to a big aggregate increase in demand, hospitals have an incentive to adopt new medical technologies. People will use these technologies because they are not paying for them out-of-pocket…

It therefore looks like insurance, by increasing demand because it lowers the price [to the patient] of medical care, encourages both the adoption of new technologies…and, further down the pipeline, the innovation and development of these new technologies.

In fact, Finkelstein showed that “the introduction of Medicare [caused] …enormous spending effects” and that “the spread of insurance played a very big role in driving health care spending growth over the second half of the twentieth century.”

Whether Finkelstein’s study will eventually persuade other economists, such as Frakt, remains to be seen. But it is noteworthy that her historical evidence only confirms what should have been demonstrable by careful reasoning all along: subsidies raise prices, and massive subsidies raise prices massively.

So here’s a paradox to conclude with. Compared to technology, ideas are cheap. But when bad ideas are concocted into a widely embraced but faulty economic theory, the result can be ruinously expensive.

So Professor Arrow wanted empirical evidence that people are not careful and prudent when they are spending someone else’s money?

You never know!

Excellent write-up on the inversion of blame for rising costs in healthcare.

You may also enjoy Milton Friedman’s excellent narrative on the subject, http://www.hoover.org/research/how-cure-health-care-0

Although written in 2001, it is tragically correct in its analysis of the reason for the rise in costs. His description of “Gammon’s Law” is one of my greatest discoveries and readings of 2016.

Here is an excerpt:

“Some years ago, the British physician Max Gammon, after an extensive study of the British system of socialized medicine, formulated what he called “the theory of bureaucratic displacement.” He observed that in “a bureaucratic system . . . increase in expenditure will be matched by fall in production. . . . Such systems will act rather like ‘black holes,’ in the economic universe, simultaneously sucking in resources, and shrinking in terms of ‘emitted production.’” Gammon’s observations for the British system have their exact parallel in the partly socialized U.S. medical system. Here, too, input has been going up sharply relative to output. This tendency can be documented particularly clearly for hospitals, thanks to the availability of high-quality data for a long period.

The data document a drastic decline in output over the past half century. From 1946 to 1996, the number of beds per 1,000 population fell by more than 60 percent; the fraction of beds occupied, by more than 20 percent. In sharp contrast, input skyrocketed. Hospital personnel per occupied bed multiplied ninefold, and cost per patient day, adjusted for inflation, an astounding fortyfold, from $30 in 1946 to $1,200 in 1996. A major engine of these changes was the enactment of Medicare and Medicaid in 1965. A mild rise in input was turned into a meteoric rise; a mild fall in output, into a rapid decline. Hospital days per person per year were cut by two-thirds, from three days in 1946 to an average of less than a day by 1996.”

Thanks! That’s a great piece by Friedman.

This was an excellent piece on a very difficult subject. I note that people on the left frequently take both sides on the question of how much extra cost insurance creates. “Not that much” is the preferred answer because of ideology, but when it comes to blaming physicians for overtreatment, they suddenly forget about their other argument.

I don’t think you can convince Frakt of anything because, though he has some good ideas, he frequently rationalizes untenable positions, and he has thin skin to boot.

Thank you, Allan, I agree. Historically, health insurance has been the invention of progressives, so they will downplay its obvious effect on cost, unless they can blame greed or technology or some other evil…

One other aspect of the problem is the effect of third party payment on technology producers. When third parties pay and restrict utilization, producers are incentivized to develop high complexity, high cost technology rather than the reverse. No mass market for consumers, who are highly interested in information available through medical technology, can develop in a system where procedures are limited to performance in medical facilities, requiring an appointment and physician gatekeeper permission and a medical diagnosis. In ruminating over this issue in an essay I wrote a few years back it occurred to me that,

“it is interesting to note that two-dimensional echocardiography technology came into general use a few years before the marketing of the personal computer by IBM. Perhaps the comparison is unfair but on the surface at least the relative stagnation of cardiovascular ultrasound and EKG technology as opposed to the dramatic transformation of personalized computing during the same time span is startling.”

https://1drv.ms/w/s!Ar9MIXbYsvAygnfnoF4OpWoydKDT

Excellent points, Anthony.

I finally got around to looking up KardiaMobile by AliveCor. A very encouraging development: EKG technology marketed directly to consumers. They’re going for the mass market instead of the small universe of medical providers and insurance payers, and so the devices are cheap (and could eventually be much cheaper as competition develops). I expect they’ll make a fortune. Look at the consumer reviews on the website. They reflect a smart, perceptive, intensely interested public. They warm the cockles of my liberty-loving heart. This looks to me to be another breakthrough in the accelerating, under-the-radar revolution of escape of the public from their medical establishment/government masters. I’m strongly considering getting one for myself since I’m bugged by arrhythmias.